Sunday, 30 December 2018

Tutorial: How to record HTTP SOAP requests and responses

We have just added a new tutorial on How to record and replay HTTP SOAP requests and responses to create API mocks. It will show you how to use Traffic Parrot to simulate a SOAP API.

Wednesday, 19 December 2018

3 bitter types of technical debt

We have noticed a pattern on the projects we have worked on for our clients. Technical debt develops in three common categories. The interest you will be paying on that debt looks very different in each of those cases.

Eventually repaid

Some teams never allow technical debt to grow to levels where they even talk about technical debt. It's just business as usual to refactor on a daily basis.

| Very low negligible technical debt |

Other teams may start with a noticeable level of technical debt because they are taking on work on legacy systems, but they reduce it as they go along by refactoring while adding new features.

| Eventually repaid technical debt |

The amount of interest you pay on technical debt is eventually so low, you barely notice it.

Sustainable

| Sustainable technical debt |

Teams in this category continually repay the interest on the technical debt, but the amount of the debt stays the same. The difference between “eventually repaid” and “sustainable” is the amount of interest you pay on a daily basis while making changes to the code. In “eventually repaid” the interest gets close to zero; in “sustainable” it stays at a constant level throughout the lifecycle of the project.

Compound growth

Teams stop refactoring but continue to add new features. Teams stop paying interest on the debt, and the interest is consolidated into the debt. The debt goes into a compound interest cycle and starts growing exponentially.

| Compound growth technical debt |

This is similar to the Broken Windows Theory, applied to the quality of code: if you see that not repaying technical debt is the norm in your team, you personally don't repay it either. You just add new features, and the debt grows.

This typically ends with postponed releases, bugs in production and a lot of tension in meetings where people blame each other for not working well or hard enough. It takes several postponed releases and a few major bugs visible to senior management before anyone realises the teams need to repay some debt to go faster.

Unfortunately, the cost of repaying debt is much higher by that point, because you also have to pay back the compound interest that was consolidated into the debt. In other words, 2 hours invested in repaying technical debt 6 months ago could be equivalent to 1 day of work today to repay the same amount of debt.

The problem with this approach is that it feels like you are going fast to start with, because you are delivering features and the technical debt is not hurting you much at the very beginning. In reality, you are putting yourself on the compound interest curve instead of staying linear. Linear and compound curves look similar at the start but very different later on.
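To make the difference between the curves concrete, here is a minimal Python sketch. It uses only the illustrative figures from the summary table later in this post (12% constant slowdown for "sustainable"; 22% slower initially and 370% slower after 12 months for "compound growth"). The assumption that the slowdown grows by a constant factor every month is ours, made purely for illustration.

```python
# Sketch: constant vs. compound growth of the daily "interest" (slowdown)
# paid on technical debt. The 12%, 22% and 370% figures are the examples
# from this post; the constant monthly growth factor is an assumption.

def implied_monthly_growth(start: float, end: float, months: int) -> float:
    """Constant monthly factor that turns `start` into `end` after `months`."""
    return (end / start) ** (1 / months)


def compound_slowdown(start: float, growth: float, month: int) -> float:
    """Slowdown after `month` months when it is multiplied by `growth` monthly."""
    return start * growth ** month


SUSTAINABLE_SLOWDOWN = 0.12  # stays flat for the whole project
growth = implied_monthly_growth(0.22, 3.70, 12)

for month in (0, 3, 6, 9, 12):
    compound = compound_slowdown(0.22, growth, month)
    print(f"month {month:2d}: sustainable {SUSTAINABLE_SLOWDOWN:.0%}, "
          f"compound {compound:.0%}")
```

Under that assumption the slowdown grows by a little over 26% every month, which is why the two curves look alike for the first few months and then diverge sharply.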

In most cases, you want to avoid ending up in this category. An example of where this type of debt is acceptable is when you need to hit a regulatory deadline, where the cost of not hitting the deadline outweighs the cost of repaying the compound debt accumulated later on.

What is the lesson learned here?

Keep in mind which category you want to be in and make the conscious choice to invest in technical excellence where necessary. Aim for low levels of technical debt or constant medium levels, and never for compound growth of debt.

| | Eventually repaid | Sustainable | Compound growth |
| --- | --- | --- | --- |
| Type of debt | You eventually repay almost all debt. | You keep debt at the same level by paying off all the interest. | You do not repay debt, interest is added to the debt, and the debt grows exponentially. |
| Daily repayment amount | Develop features and refactor the new and old code heavily. | Develop features and refactor the new and old code a bit. | Only develop new features, with little to no refactoring. |
| Daily interest teams pay | Linear, going down. For example, at first developers are going 24% slower because of debt; eventually, 0% slower if they repay all of the debt. | Linear, constant. For example, developers are constantly 12% slower because of the debt. | Grows exponentially. For example, developers are 22% slower initially, but in 12 months' time they are 370% slower compared to if they had no technical debt. |

Next steps

At Traffic Parrot, we are building a framework to help teams visualise, manage and reduce technical debt. It will help you decide on the level of technical debt that is acceptable and make the right choices at the right time so that you do not end up in the “compound growth” category. Sign up here to get notified when it's available: https://trafficparrot.com/technical_debt.html

What is your experience with technical debt?

Friday, 7 December 2018

You need Refactoring to stay Agile and competitive

Do you want to release software to production often and fast, continuously? Do you want to release high-quality software? In other words, do you want to deliver value to your customers before your competitors do it for you?

One of the building blocks for high-quality software is the practice of refactoring. If you cannot refactor but continue to add new features, your software quality will most likely degrade over time, and you will accumulate high levels of technical debt.

I have seen that recently at a project for a client where we had a bunch of good developers, but the fact that they did not refactor the code on a regular basis and continued to introduce new features meant the project came to a point where there were significant issues with every release. Pretty much most of the recent releases would not go to production on time, and if they did, there were major bugs discovered by customers.

The three main contributors to lack of refactoring, in this case, were (surprise!?)

- Not enough interest from the business in investing in technical excellence ("Continuous attention to technical excellence and good design enhances agility")

- Lack of sufficient levels of acceptance/functional UI and API testing. No confidence in the tests meant developers were afraid to change production code in case they break existing features.

- Root cause analysis of issues leads to developers having to explain themselves, which teaches developers to stay under the radar and not change anything that is not necessary.

How can we resolve these issues?

- Educate the business on the consequences and trade-offs they are making when they are not investing in technical excellence, especially refactoring in this case. For example, lack of refactoring together with continually adding new features is likely to result in lower quality software (making customer-facing issues that the business has to report to the FCA more likely) and less predictable and less frequent releases (resulting in less value delivered to the customers and allowing competitors to deliver that value instead).

- Build up a robust suite of tests that the developers trust — for example, a testing pyramid that would include unit tests, integration tests, BDD API acceptance tests, BDD UI acceptance tests and contract tests.

- Create a culture of "The process is to blame, not individuals". Whenever something terrible happens, figure out how to change the development process rather than point at individuals. For example, instead of blaming a developer for introducing a bug, figure out what types of tests you should start writing to stop these types of bugs leaking to production in the future.

What is your experience with lack of refactoring?

Thursday, 6 December 2018

Invitation for a group discussion: The meaning of Agile in 2018

Join Wojciech to talk about Agile in software testing at the European Software Testing Summit "Group discussion: The meaning of Agile in 2018" on the 12th of December 2018.

Wojciech will lead a group discussion with the audience about the state of Agile software testing and development, its history and where the industry is today. We will talk about where Agile comes from, the Agile Manifesto, how we practice it today, what works well and where we can improve.

Tuesday, 13 November 2018

Sunday, 21 October 2018

How much time it will take to build/virtualize a simple, medium and complex service?

Question

I'd like to know how much time it will take to build(Virtualize) a simple, medium and Complex Service

Answer

A simple service depending on your team's maturity and setup will typically take a few minutes to a day.

A medium service can take from a few hours to a few days.

A complex service can take from a few days to a few weeks.

Question

What are all the activities involved from start to finish

Answer

That depends on your environment and team structure. As an example, I recommend having a look at http://blog.trafficparrot.com/2016/09/five-steps-on-how-to-implement-service.html

We would like to learn more about your organisation in order to help you deliver your service virtualization project. Please email us at consultation@trafficparrot.com to schedule a call where we can discuss your requirements.

Saturday, 20 October 2018

If-else dynamic API mock responses

Question

How do I generate dynamic API mock/stub responses and use if + else if + else in GRPC?

Answer

You can start with multiple equals blocks one after another. For example:

{{#equal (jsonPath request.body '$.itemCode') '100'}}

{ countryCode: 'ES' }

{{/equal}}

{{#equal (jsonPath request.body '$.itemCode') '200'}}

{ countryCode: 'IC' }

{{/equal}}

{{#equal (jsonPath request.body '$.itemCode') '300'}}

{ countryCode: 'TW' }

{{/equal}}

{{#equal (jsonPath request.body '$.itemCode') '400'}}

{ countryCode: 'CH' }

{{/equal}}

If that does not satisfy your needs, you can do nested equals blocks.

{{#equal thing 'something'}}

{{#equal 'one' 'two'}}

Yes!

{{else}}

No!

{{/equal}}

{{else}}

Not a thing!

{{/equal}}
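To show the control flow the two templates above express, here is a rough Python equivalent. It is only a sketch of the logic, not of how Traffic Parrot evaluates templates. The 'UNKNOWN' fallback value is hypothetical, and the second function assumes that an {{else}} branch can itself contain another {{#equal}} block, as the nested example suggests.

```python
# Item codes and country codes mirror the first template above.
COUNTRY_BY_ITEM_CODE = {"100": "ES", "200": "IC", "300": "TW", "400": "CH"}


def sequential_blocks(item_code: str) -> str:
    """Consecutive {{#equal}} blocks: every block is an independent `if`."""
    output = ""
    for code, country in COUNTRY_BY_ITEM_CODE.items():
        if item_code == code:
            output += f"{{ countryCode: '{country}' }}"
    return output  # empty string when no block matched


def nested_blocks(item_code: str) -> str:
    """Nesting the next check inside {{else}}: if / else if / else."""
    if item_code == "100":
        return "{ countryCode: 'ES' }"
    elif item_code == "200":
        return "{ countryCode: 'IC' }"
    else:
        # Hypothetical default, rendered only when nothing else matched.
        return "{ countryCode: 'UNKNOWN' }"


print(sequential_blocks("300"))
print(repr(sequential_blocks("999")))
print(nested_blocks("999"))
```

The practical difference is the last case: consecutive blocks render nothing for an unknown item code, while the nested form always has a final else branch to fall back on.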

Saturday, 6 October 2018

Sidecar Container Pattern in Service Virtualization and API mocking

Traffic Parrot has first-class support for the sidecar docker container pattern.

Let's assume you work with a Jenkins Continuous Integration pipeline and test your microservices there to get to production fast. The more external dependencies the build pipeline has, the higher the risk that those dependencies will make the build unstable and cause it to fail for reasons unrelated to code quality. External dependencies should be kept to a minimum. For example, run your database in Docker per build (on the Jenkins Slave), not in a shared environment. It is a pattern similar to the "sidecar" container pattern recommended by Jenkins.

We recommend running your API mocks in the same process as your tests. If you decide to run your API mocks or virtual services in a separate process though, run them in a Docker container. This takes your automation to the next level: you have a fully automated and reproducible build pipeline.

Because of issues with dependencies, avoid using centralized instances running API mocks and virtual services outside your build pipeline. You can use them for exposing mocks to other teams and departments and for manual exploratory testing, but not in your automated CI builds.
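As a rough illustration of "one mock container per build", the Python sketch below builds a `docker run` command whose container name includes the build identifier, so parallel builds never share a mock. The image name, port and build id are hypothetical placeholders, not real Traffic Parrot artifacts; only the standard `docker run` flags (`--rm`, `--detach`, `--name`, `--publish`) are assumed.

```python
def mock_container_command(build_id: str, image: str, port: int) -> list[str]:
    """Build a `docker run` command for a mock that lives only for one build."""
    return [
        "docker", "run",
        "--rm",                             # remove the container when it stops
        "--detach",                         # run in the background during tests
        "--name", f"api-mock-{build_id}",   # unique per build, never shared
        "--publish", f"{port}:{port}",      # expose the mock port to the tests
        image,
    ]


# Hypothetical values; in Jenkins the build id could come from BUILD_NUMBER.
command = mock_container_command("1234", "example/api-mock:latest", 8080)
print(" ".join(command))
```

A build script could pass the resulting list to `subprocess.run(command, check=True)` before the tests start and stop the container by name afterwards.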

Wednesday, 19 September 2018

Service Virtualization As Code (API Mocking As Code)

Traffic Parrot has first-class support for the service virtualization as code pattern (also called API mocking as code).

If you have any state or configuration you manage in your IT infrastructure, the best solution in most cases is to version control it in a source control system like Git. For example, if you are running on Kubernetes and Docker, your whole infrastructure might be defined in a source control repository as Dockerfiles and Terraform Kubernetes configuration files. It's called infrastructure as code.

We advise doing the same with your API mocks and virtual services. All your mocks and virtual services should be stored in a version control system such as Git. In the case of Traffic Parrot, this is possible since all request to response mapping files are stored on the filesystem as JSON files. Alternatively, you can use the JUnit TrafficParrotRule directly in your JUnit tests. This takes your automation to the next level: you have a fully automated and reproducible build pipeline.

Because of the issues that can arise with manual processes, avoid having API mocks and virtual services that are updated manually and never stored in Git or a similar source control system. Store all your API mocks and virtual services in a source control system.
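Once mappings live in Git as JSON files, they can be checked automatically like any other code. The Python sketch below is one possible pre-merge check that every JSON file under a mappings directory at least parses. The directory layout is an assumption, and the check knows nothing about the actual mapping schema.

```python
import json
from pathlib import Path


def find_invalid_mappings(mappings_dir: Path) -> list[str]:
    """Return paths of *.json files under mappings_dir that fail to parse."""
    invalid = []
    for path in sorted(mappings_dir.rglob("*.json")):
        try:
            json.loads(path.read_text(encoding="utf-8"))
        except (json.JSONDecodeError, UnicodeDecodeError):
            invalid.append(str(path))
    return invalid
```

A CI step could call `find_invalid_mappings(Path("mappings"))` (a hypothetical directory name) and fail the build when the returned list is not empty.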

Wednesday, 8 August 2018

Case study: a Fortune 500 E-Commerce Company Moves To A Microservice Architecture and uses Traffic Parrot

Software architects at a Fortune 500 retail e-commerce company purchased Traffic Parrot Enterprise to speed up their software delivery process and reduce the costs of development and testing. In this article, we will explore the details of why they chose Traffic Parrot and how they have used it to help with development and testing of their microservice architecture.

Executive summary:

- Traffic Parrot is a tool used by developers to deliver high-quality microservices faster

- Traffic Parrot is designed for autonomous product teams developing microservices, other service virtualization tools do not work well in those environments

- Purchasing Traffic Parrot resulted in faster time to market and lower long-term maintenance costs

Value to the IT architects

"We are building a new platform based on microservices and needed a testing infrastructure that allowed all of our testers to work autonomously, using local machines to record their communication streams between their services and inside our pipelines in order to virtualize a service. Traffic Parrot, while not as fully featured as offerings from HP and IBM, offered the key capabilities that our teams needed such as HTTPS and JMS IBM MQ and a substantially smaller footprint that allowed it to run locally. We have been very pleased by Traffic Parrot's email support and responsiveness to our requests and would recommend them to other teams doing microservice-based architectures." Chief Architect at a Fortune 500 company

Developers working for the company are building microservices that handle the backend processing of transactions. The e-commerce website is based on the IBM WebSphere eCommerce platform. The applications they work with communicate via HTTP JSON REST APIs, GRPC, ActiveMQ via JMS and IBM MQ via JMS. All microservices are deployed in OpenShift in Docker containers.

One of the challenges with moving to a microservice architecture is that there are many components that need testing. In most cases, microservice architectures will require a Continuous Delivery environment with a Continuous Integration build pipeline for every microservice. That means we will need to test microservices in isolation in automated builds. They will be also developed and tested on developers’ desktops or laptops.

This particular company had the following technical challenges:

- Need to create mockups for HTTP, GRPC, JMS ActiveMQ and JMS IBM MQ services

- Need to deploy in Docker to OpenShift (on Red Hat Enterprise Linux Atomic Host)

- Need to test in Bamboo

- Need a graphical/web user interface in the mock

- Need dynamic responses (scripting) in the mock

- Need to choose JMS target queue name based on message content

| Diagram 1: Production environment setup at the company |

There are open-source tools on the market that can be deployed using Docker or run in CI. Unfortunately, they come with limited support for different protocols; you would have to use several tools and develop missing protocols in-house. They also often come with no UI or commercial support.

There are other commercial tools that support many protocols and provide advanced dynamic response templating. They also provide richer user interfaces that allow users to develop more complex workflows without having to program in Java. Unfortunately, they typically require developers to use thick clients for creating the virtual services, which requires everybody to install them and pay for the licenses. They are also designed to be deployed in a central place managed by one team of administrators rather than running on any laptop or inside an automated CI build. Also, the mock definition artifacts they produce, compared to open source alternatives, are not easy to version control in Git or Subversion.

It was important for the architects to give more autonomy to the development teams and not to have a centralized tool for creating the virtual services and API mockups. They did not want to create another bottleneck in the form of a centralized administrators team.

They have decided not to build the solution in-house and instead focus on the development of the e-commerce platform, and use external tools like Traffic Parrot to reduce the time to market and decrease long term maintenance costs.

They also did not need all the features that the other bigger tools provide.

Traffic Parrot was chosen by the company because it gave them a mix of what the well-established commercial and open source tools provide. It has more functionality and protocols than the open source tools. It also has a more flexible deployment and licensing model than the other commercial tools. The architects wanted a tool that was simple to use and lightweight. Traffic Parrot satisfied all of those requirements.

| Diagram 2: How the developers test microservices in isolation |

How developers use Traffic Parrot at the company:

- Develop the microservice tests in SoapUI on their laptop

- Start Traffic Parrot on their laptop

- Use the Traffic Parrot Web UI to record traffic (which could be many different protocols) and create mocks by running the SoapUI tests with the microservice that will hit Traffic Parrot in recording mode

- Push the mock definitions (request-response mappings) to Git

- Checkout SoapUI tests and Traffic Parrot mocks from Git

- Maven runs Traffic Parrot plugin to start the HTTP, ActiveMQ and IBM MQ mocks

- Maven SoapUI plugin runs the tests that hit the microservice

- The microservice communicates with Traffic Parrot, which is pretending to be the real HTTP, ActiveMQ, gRPC and IBM MQ dependencies

Value to the business

The multinational e-commerce marketplace is competitive. Moving to a microservice architecture has increased the productivity of the IT software department, resulting in decreased costs and time to market for both new and existing products.

To make that transition smooth and fast, the company needed tools that are designed to be used in microservice architectures by a team doing Continuous Integration.

The commercial offerings are too heavyweight to be used in the CI environment the company has in place. Also, the licensing costs are too high to justify the value of those tools.

The architects reached out to the Traffic Parrot team for a beta version with JMS support (currently JMS support is not in beta any more). The architects have decided to purchase an unlimited user license for Traffic Parrot. Over a period of 6 weeks, the Traffic Parrot team delivered the missing functionalities as per the requirements of the company architects.

It is difficult to attract and retain development talent. The company has chosen to pay for Traffic Parrot to get guaranteed support and avoid spending internal development time and energy on what would essentially be re-inventing the wheel. They focus their talents on additions to their core offering that increase the value to their end customers.

Summary

If your company is moving to a microservice architecture, you might need more than what the open source tools provide. If you need an API-mocking tool that supports many protocols but also has a user-friendly UI and is designed to be used with microservices and decentralized autonomous teams that follow CI, Agile and DevOps, consider using Traffic Parrot.

Next steps

- Download Traffic Parrot trial

- Contact Traffic Parrot to schedule a demo

- If you have any requirements that are missing in Traffic Parrot, please contact us to discuss potential roadmap acceleration

Tuesday, 7 August 2018

Tuesday, 31 July 2018

GRPC API Mocking and Service Virtualization

Are you looking for a tool that supports gRPC API Mocking or Service Virtualization? Traffic Parrot version 4.0.0 and later provides support for unary gRPC and server streaming gRPC.

If you would like to know more, please see the gRPC API mocking documentation.

If you would like to try gRPC mocking yourself, download Traffic Parrot now.

Thursday, 19 July 2018

Traffic Parrot 4.0.0 released

We have just released Traffic Parrot 4.0.0. It is available for download on trafficparrot.com.

It includes new features such as:

- Support for mocking and virtualizing gRPC APIs and services

- Visual XML editor

- Extended support for SOAP - matching on request body SOAP values

- HTTP(S) stateful scenarios available via the Web UI

- Signing SOAP messages

Sunday, 15 July 2018

Video: How software testers can test microservices

Wojciech has given a talk at the Ministry of Testing - Brighton and Hove testers meetup on the 18th April 2018. Thank you to the organisers for a great event and for having us!

You can find a recording of the presentation below (25 minutes).

Key takeaways from the presentation:

- A case study from a media company where QAs tested microservices running on Docker and Kubernetes

- Microservices testing risks

- How QA teams can provide value in a microservices world

- Typical QA team responsibilities with microservices

Sunday, 17 June 2018

Video: How software testers can add value in a world of microservices?

Last month Wojciech talked at the National Software Testing Conference in London about how software testers can add value in a world of microservices.

The video presentation can be purchased from 31 Media http://www.softwaretestingconference.com/product/video-presentations-2018/. Please note we do not receive any commission on the sales of that video; all money goes to 31 Media.

Sunday, 10 June 2018

How to mock and also passthrough HTTP(S) requests to multiple systems?

You can use API mocks to isolate yourself from backend and third party dependencies. There are cases where it would be advisable to look up responses in the API mock but, when none are found, forward (passthrough) the request to the real backend or third party service. In Traffic Parrot you can passthrough to one domain at a time. So how do you deal with a situation where there is more than one domain you would like to mock? The solution is to use multiple Traffic Parrot instances, each mocking and passing through to a different domain.

| Mock and pass through to multiple domains |

To configure passthrough, open Traffic Parrot and click on HTTP->Passthrough.

| How to configure passthrough |
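The lookup-then-forward behaviour described above can be sketched in a few lines of Python. This is a simplified model of the idea, not Traffic Parrot's implementation: the class, the domains and the `fake_backend` function are all hypothetical, and the "real" backend is replaced by a function so the example runs without a network.

```python
from typing import Callable, Dict


class MockWithPassthrough:
    """One mock instance sitting in front of exactly one real domain."""

    def __init__(self, domain: str, forward: Callable[[str, str], str]):
        self.domain = domain
        self.forward = forward            # stand-in for a real HTTP call
        self.stubs: Dict[str, str] = {}   # request path -> canned response

    def stub(self, path: str, body: str) -> None:
        self.stubs[path] = body

    def handle(self, path: str) -> str:
        # Look the request up in the mock first...
        if path in self.stubs:
            return self.stubs[path]
        # ...and pass it through to the real domain when nothing matches.
        return self.forward(self.domain, path)


def fake_backend(domain: str, path: str) -> str:
    return f"real response from {domain}{path}"


# Two domains to mock means two instances, one per domain.
payments = MockWithPassthrough("payments.example.com", fake_backend)
inventory = MockWithPassthrough("inventory.example.com", fake_backend)
payments.stub("/status", "mocked: OK")

print(payments.handle("/status"))
print(payments.handle("/refunds"))
print(inventory.handle("/stock"))
```

Each instance keeps its own stubs and its own passthrough target, which is what makes the one-domain-per-instance setup work.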

Saturday, 26 May 2018

Thursday, 24 May 2018

Updated Privacy Policy

We have updated the Privacy Policy. Please find the latest version available at https://trafficparrot.com/privacy-policy.html

Tuesday, 15 May 2018

How software testers can add value in a microservices world?

Ever wondered how to test microservices?

Wojciech will talk at the National Software Testing Conference in London on the 23rd of May 2018 about just that!

He will talk about a global media company that decided to move their infrastructure to Docker/Kubernetes and microservices. He will cover QAs' daily responsibilities in that environment, microservices testing risks and how QAs can provide value in a microservices environment where 93% of code test coverage is done by automated tests written by developers.

Sign up now for this talk and many more at http://www.softwaretestingconference.com/

Thursday, 26 April 2018

Dynamic API mocks

We have just published a new tutorial on how to create dynamic responses in your API mocks.

Saturday, 17 March 2018

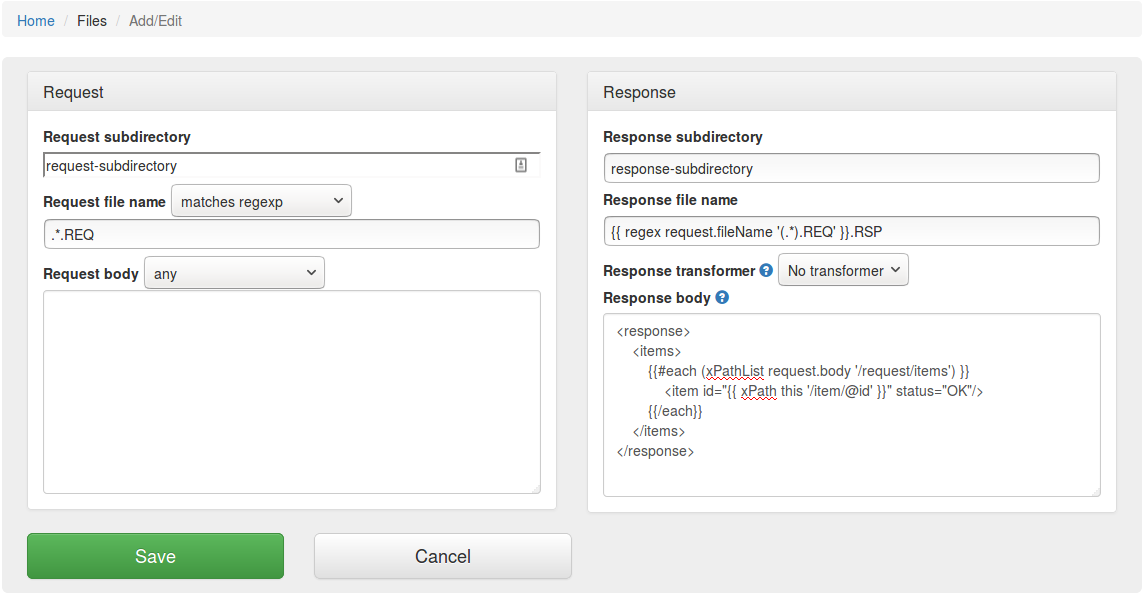

Simulating systems communicating with file transfers to save time and enable automated testing

Sending and receiving files is a common way of exchanging information in many industries. Two main ones are banking (for example SWIFT) and telecommunications (for example mobile number porting). They are typically shared over local filesystems, NFS or FTP/SFTP.

This raises issues like:

- Setting up test data in the third party or backend systems slows down developers and testers on your team

- Creating response files based on request files without the third party or backend system is done manually

- Creating files manually will not work when you are running automated tests

- Crafting files manually is error-prone

- Crafting files manually is time-consuming

- Sometimes response files need to be present within only a few seconds because the application times out otherwise, so there is no time to create them manually

| Simulating systems communicating with files |

You can:

- Consume request files

- Produce response files

- Record and replay communication with files

- Manually create request to response file mappings

- Generate dynamic response file names (scriptability)

- Generate dynamic response file content based on request file content (scriptability)

| Example of a request to response file mapping |

For more information, have a look at the file transfers documentation.
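To give a feel for what consuming request files and producing response files involves, here is a minimal Python sketch of a file-based simulator. It does not show how Traffic Parrot works internally. The `.req` and `.resp` extensions, the directory arguments and the "ACK" response format are all hypothetical choices made for the example.

```python
from pathlib import Path


def process_request_files(request_dir: Path, response_dir: Path) -> list[Path]:
    """Consume every request file and produce a matching response file."""
    response_dir.mkdir(parents=True, exist_ok=True)
    produced = []
    for request_file in sorted(request_dir.glob("*.req")):
        content = request_file.read_text(encoding="utf-8").strip()
        # Dynamic response file name, derived from the request file name.
        response_file = response_dir / f"{request_file.stem}.resp"
        # Dynamic response content, derived from the request content.
        response_file.write_text(f"ACK {content}\n", encoding="utf-8")
        request_file.unlink()  # the request has been consumed
        produced.append(response_file)
    return produced
```

Calling this function in a loop with a short sleep would cover the case above where response files must appear within a few seconds.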

Monday, 5 March 2018

Get access to Traffic Parrot Beta

Do you need service virtualization, API mocking or system simulation for one of these protocols?

- FIX

- FAST

- FIXatdl

- SWIFT

- AMQP

- MQTT

- RabbitMQ

- SonicMQ

- CORBA

- GRPC

- FTP

- SFTP

- .NET WCF

- RMI

- MTP

- TIBCO EMS

- CICS

- SAP RFC

- JDBC

- Mongo

Join the Traffic Parrot Beta programme to get access to them and other features.

Saturday, 17 February 2018

Running free IBM® MQ for Developers

Sometimes when mocking or virtualising systems that communicate with IBM WebSphere MQ, you do not have the admin rights to create new queues for Traffic Parrot on your existing IBM instance.

One solution is to use the free IBM MQ for Developers.

We have created a tutorial on how to spin it up in a matter of a couple of hours using an AWS free tier EC2 Linux box.

View the tutorial here.

Thursday, 11 January 2018

The Best QA Software Testing Directory (Top Tools and Companies in 2018)

Check out position number 9 in The Best QA Software Testing Directory!

Friday, 5 January 2018

Simulating IBM® MQ JMS systems with dynamic mock responses

We have just added a new section to the tutorials about Simulating IBM® MQ JMS systems with dynamic mock responses. It will show you how to create dynamic mock JMS IBM MQ responses. Read more here.
